On January 20, 2021, Tech for Good Canada convened a discussion, moderated by our director Caroline Isautier, designed as an introduction for business leaders, investors and citizens to the why and how of implementing data privacy by design, and security along with it, in Tech deployment and business processes.

Below is a detailed recap of the exchanges, with many useful nuggets of information. Video excerpts of our two-hour discussion are being released day by day on our YouTube channel. We begin with some context.

This discussion stems from concerns about the tendency, in too many Tech solutions today, whether for professional or personal use, to build in surveillance by default. The ad-based business models favored by Silicon Valley, with their huge initial gains, and the ethic of « Move fast and break things » have instilled in Tech a culture of disregard for users’ consent to the use of their data.

This era is coming to an end. The growing number of data breaches, and outrage over « data mining » without consent by some of the biggest Tech companies, namely Facebook, Google and Amazon, are making privacy by design as important as security in the development of Tech solutions today.

Movies, series, YouTube videos and comedy skits about Tech surveillance are now mainstream. The breakthrough documentary “The Social Dilemma”, out in September 2020, follows “Black Mirror”, “Nothing to Hide” and “The Great Hack” as examples of popular culture denouncing citizen surveillance. For Canadian flavour, watch this Baroness von Sketch Show skit inspired by a visit to an Apple store.

Lawmakers are slowly catching up, with the European GDPR (General Data Protection Regulation), the California Consumer Privacy Act (CCPA), since amended by the California Privacy Rights Act (CPRA), and the Brazilian LGPD (Lei Geral de Proteção de Dados).

Recently, the province of Québec proposed Bill 64, while a federal Consumer Privacy Protection Act (CPPA) updating the existing PIPEDA is in the works. [Update: Québec passed Bill 64 in September 2021, and as of June 2022, Canada is working toward an expanded privacy bill, Bill C-27.] These laws generally require companies to map out the data they collect and to declare data breaches. They also impose large fines on companies that do not obtain consent to use individuals’ data, or that over-collect or use that data in unfair ways. Additionally, they allow citizens to request a report on the information a company holds about them.

To tackle this emerging and complex issue, which combines governance, legal and technical aspects, we brought together four complementary perspectives for a discussion moderated by our Director, Caroline Isautier. See their bios at the end of this article.

A New, Unregulated Private Surveillance We’re All Waking Up To

As Sal D’Agostino, a senior US expert on video surveillance and digital identity, puts it: « the advertising technology used widely today is another kind of surveillance, not for public safety, but to maximize revenue ».

Most of us, as professionals and individuals, have accepted this so far: first because we weren’t conscious of it, then in exchange for innovation and convenience.

Nothing to Hide? Better Have Nothing to Say

Plus, we have « nothing to hide », right? Abigail Dubiniecki, a top privacy expert and lawyer, reminded us that privacy is a basic human right. When we are surveilled, we lose our freedom of action and, bit by bit, start changing our behaviour. The danger we face today in the business world is treating privacy compliance as a legal issue, a “tick-box” exercise, without appreciating how fundamental privacy is to our everyday lives.

Is Privacy Compliance a Lawyer’s Purview? It’s a Company-Wide Governance Issue

As Cat Coode indicated, “privacy by design” is literally the idea of designing your business practices to put customer and employee privacy first.

Therefore, privacy for companies, even if it’s now mandated by law, is not meant to be simply a legal reporting exercise. It is meant to be a useful investment that reduces the risk of breaches, fines and lawsuits. Its principles necessarily translate into technical implementations, some internal and many external, from third-party vendors.

« BOLTS » is the acronym Sal D’Agostino uses for the Business, Operations, Legal, Technical and Social aspects of a true privacy compliance effort.

Sadly, too much emphasis has been put in the past on purely legal compliance (such as through a privacy policy). My personal analogy is that of opening an umbrella under a leaking roof: it won’t get you very far in the long term.

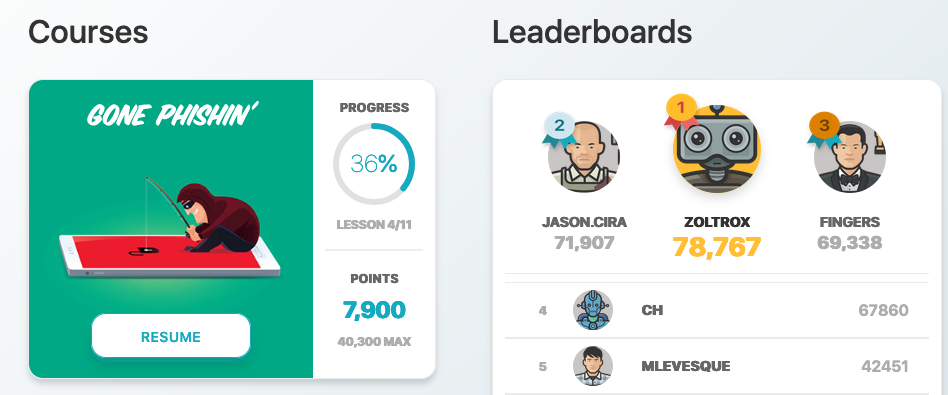

On the social aspect, Cat Coode, an engineer by profession turned privacy expert, added that employee training on regulations (what they mean and why they are important) is a commonly overlooked area in compliance audits, since privacy and security risks involve human error more often than not. Producing engaging training is one of the challenges expert Scott Wright of Click Armor is addressing!

Beyond that basic training, she says what’s missing today is Privacy by Design (PbD) training. Product managers should have an understanding of how to define PbD requirements and technologists should have an understanding of how best to design their systems to support privacy. Without that education, any policies or processes you try to put in place will not be well received.

What Is the Cost of Sloppy Privacy Compliance?

Cat explains the case of a company in Europe that was fined 200 000 € for not complying with three requests from an individual to see their data, a right under GDPR (and most privacy laws).

She indicates there are no examples of fines imposed in Canada yet, but Québec’s Bill 64, in the works at the time, would change that. « I think the bigger impact is in breaches and damage to reputation », she adds. Take the case of LifeLabs, the lab testing company that was hacked: a reputation-staining event that will make customers think twice about getting tested there. Likewise for LastPass, the password manager that was breached.

On another front, AnyVision was a facial recognition company with « odious » business practices, says Sal. Once this came out, Microsoft dropped its investment in the company. « It can affect your hiring capabilities as well, especially regarding tech professionals », says Abigail.

Abigail adds that even if regulation catches up slowly, public opinion applies pressure first. We saw this during the Black Lives Matter movement with Amazon, which was sharing its private surveillance images with Canadian police. This was a way for public institutions to outsource some of their surveillance to private companies with less oversight. Those contracts had to be ended.

What Are Some Good General Data Hygiene Principles of Privacy by Design?

- Do not collect data you don’t need. « Information hoarding », in Sal D’Agostino’s terms, is a thing today. It’s also better for the climate, because data servers use a lot of energy, Abigail adds.

Discard sensitive data you had to collect, such as personal identification, as soon as you’ve used it. In Sal’s words: “Apply the Marie Kondo method to data. Does it spark joy? If not, destroy it!”

Cat Coode adds that this is the best way to avoid being on the hook in case of a breach, and that it is part of the data life cycle plan an SME should have in place.

- To software vendors, Abigail suggests using development frameworks that push data compliance to the edge, i.e., to the end user’s device. In this approach, the vendor sees as little of the data as possible. Many cloud offerings are focusing on zero-knowledge architectures.

The absence of data collection on the vendor side is even a selling point, in particular in highly regulated professions like health care.

- Integrate turnkey tools built on privacy and security by design principles into your tech stack, for example to verify identity or age. Abigail says that, just as you wouldn’t build and maintain your own payment gateway, it doesn’t make sense to build your own online identity verification tool. Verified.Me is a Canadian example; Yoti is available globally.

You can also build decentralised apps using infrastructure like Digi.me or DataSwift instead of creating and managing user accounts yourself.

MedStack, even though it started in the health care industry, is like having your own privacy-conscious engineer living inside your app. It can even accelerate your time to market with best practices.

- Effective employee privacy and security training is often overlooked, despite being a huge part of the solution. Scott Wright created Click Armor to improve on the boring, ineffective security training common in companies, with a fun, gamified approach. Try their “Can I be phished” quiz.
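For technical readers, the first two data hygiene principles above (collect only what you need, and discard sensitive data once it has served its purpose) can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a tool any of the panelists described: the form field names, the `MinimalAccount` record, the 18-year age threshold and the retention window are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta


# Hypothetical stored record: only the fields the service actually needs.
@dataclass(frozen=True)
class MinimalAccount:
    email: str
    is_adult: bool  # derived yes/no flag, kept instead of the raw date of birth


def age_on(birth: date, today: date) -> int:
    """Return the number of whole years between birth and today."""
    years = today.year - birth.year
    # Subtract a year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth.month, birth.day):
        years -= 1
    return years


def minimize_signup(form: dict, today: date, adult_age: int = 18) -> MinimalAccount:
    """Build the stored record from a sign-up form, keeping only what is needed.

    The date of birth is used once for the age check and is not copied into
    the record; other form fields (phone, ID number, ...) are ignored entirely.
    """
    birth = date.fromisoformat(form["date_of_birth"])
    return MinimalAccount(
        email=form["email"],
        is_adult=age_on(birth, today) >= adult_age,
    )


def purge_expired(records: list, now: datetime, retention: timedelta) -> list:
    """Keep only records collected within the retention window (assumed policy)."""
    return [r for r in records if now - r["collected_at"] <= retention]


if __name__ == "__main__":
    form = {
        "email": "user@example.com",
        "date_of_birth": "2000-05-17",
        "phone": "555-0100",
        "id_number": "X1234567",
    }
    account = minimize_signup(form, today=date(2021, 1, 20))
    del form  # drop our reference to the raw form once the minimal record exists
    print(account)  # prints MinimalAccount(email='user@example.com', is_adult=True)
```

Storing a derived flag rather than the date of birth means that a breach of this record reveals much less. In a real system, the retention window passed to `purge_expired` would come from the company’s data life cycle plan rather than being hard-coded.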

SMEs Depend on the Privacy Practices of Their Third Party Vendors

How many SMEs are aware of their vendors’ data practices? Not enough, says Abigail Dubiniecki. As laws increasingly require SMEs to carry out Data Protection Impact Assessments, and since SMEs rely heavily on third parties, they often realize they’re getting short-changed. They may be giving away valuable customer data in exchange for « snake oil », i.e., promises that are not truly verified. « Don’t choose vendors that are siphoning the data and leaving you with the liability », she adds.

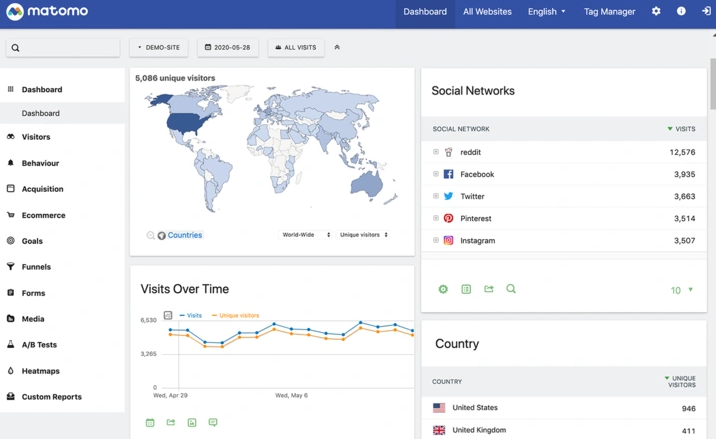

She gives the example of using Google Analytics to monitor online ad campaigns and site traffic. There are alternatives, such as Matomo, that may not be free but do not give away valuable company data to Google.

As a start-up leader, Scott Wright says he has to assess the privacy risks to his company of using third-party tools, especially with remote work. At his start-up, Click Armor, he also has to decide how to architect the data collection requested by clients so that it protects users’ privacy from the vendor’s side.

Beyond what is visible from the outside, i.e., privacy policies, he recommends questioning the business model of third-party vendors. If the tool is free, then you (and your data) are the product.

There are tools today that help citizens « surveil the surveillers », as Sal says. Privacy Badger is a free browser extension that shows website visitors the trackers on each site they’re visiting. The Brave browser does as well. Ghostery is another tool to track the trackers. It does, however, come with a fee, as any honest service does.

How are Privacy and Security Connected?

The two overlap, and their practitioners sometimes misunderstand each other, but they should certainly work together.

As Mark Lizar of OpenConsent, who was in the audience, wrote in the chat: « Privacy is better thought of as human-centric security ».

Using security to constantly surveil employees while denying them their privacy is a mistake, Abigail adds. The traditional view, says Scott, is that privacy needs security to exist. Sal adds that both help mitigate risk, and that addressing security risks without addressing privacy risks at the same time means not addressing risk thoroughly.

The discussion raised many more points over the following hour. Do listen to the video or audio versions, available soon, and contact us with feedback or questions.

See our French translation of this event recap: « Pourquoi et comment intégrer la privacy by design dans son entreprise ou association ? » (Why and how to integrate privacy by design into your business or organization?)

You may want to read more about the « surveillance economy », which Shoshana Zuboff, a Harvard professor, calls surveillance capitalism in her book The Age of Surveillance Capitalism (2019). It is one of the many books I present that cry out about the monopoly power of Big Tech, whose tools most corporations rely on today, and the danger it poses to democracy.

Expert Bios for Tech: Surveillance By Design?

- Salvatore D’Agostino represented the vantage point of a cybersecurity expert & surveillance entrepreneur, now involved in setting standards for security, surveillance and identity management solutions. He is the Founder of IDMachines & co-founder of OpenConsent.

- Abigail Dubiniecki, Counsel at nNovation (B.Ed., B.C.L., LL.B., CIPM, CIPP/E), and Data Privacy Specialist at My InHouse Lawyer, provided a legal point of view on privacy with a very hands-on, practical approach.

- Combining an engineering background with an understanding of privacy regulations in Europe, the US and Canada is Cat Coode, Data Privacy & Security Consultant and founder of Binary Tattoo.

- Finally, in the trenches of building a start-up offering gamified training solutions for digital security, after over 20 years of IT security and product management experience, we had Scott Wright, CEO of Click Armor.